The Media Lies, So Why Doesn't AI?

AI is fed lies from the media, so how can we trust it?

I’ll start with a concrete example.

In 2015, TIME amplified a Microsoft Canada marketing report claiming humans now have an “8-second attention span apparently worse than a goldfish.”

It went everywhere.

Let’s put the theory to the test and see if you can read this whole article without falling asleep. If you make it to the end, comment “I did it!” No cheating. I’ll know.

The problem?

The report wasn’t peer-reviewed cognitive science, and the goldfish comparison has no credible primary source.

Yet the meme persists a decade later.

And the article is still live 👉

If the media can oversimplify or outright mislead, why wouldn’t AI, which is trained on that media, do the same?

Short answer: It can and does.

Long answer: modern AI systems add multiple layers on top of raw training data to push away from fiction toward sourced answers.

Those layers don’t make models infallible, but they do give us levers to demand higher standards than a viral headline.

Why media lies (and how that affects AI and you)

Virality > nuance. Incentives favor catchy frames (like “goldfish”) over caveats. AI models trained on the open web ingest those headlines, too.

Secondary sourcing. Rewrites of rewrites launder weak claims until they look established; you still see fresh posts repeating the goldfish stat.

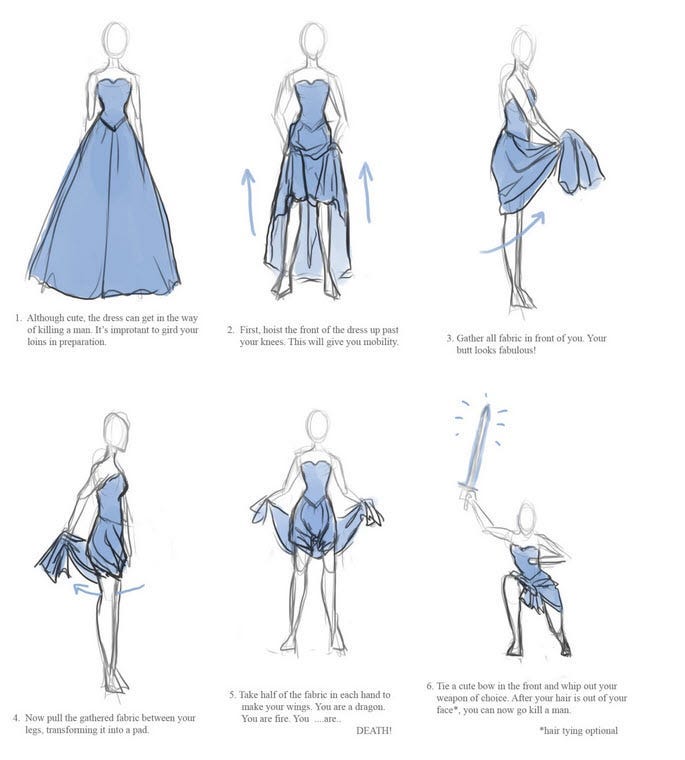

So yes, if you are not actively girding your loins as you browse the internet, you’ll soak up lies as facts.

Girding your loins means preparing yourself for a difficult task by bracing for it mentally or physically.

What actually stops “good” AI from “lying”

Key idea: today’s best practice is ground, align, and audit—not “trust the model’s memory.”

Grounding with retrieval (RAG).

Instead of relying only on what a model “remembers,” Retrieval-Augmented Generation pipes in live passages from a vetted corpus (docs, journals, your own knowledge base) and conditions the answer on those sources. This reduces fabrication and enables citations. Classic paper: RAG (Lewis et al., 2020). arxiv.org

Alignment with human (and rule-based) feedback.

Models are tuned to prefer helpful, honest behavior and to refuse when uncertain. A landmark approach is RLHF (Ouyang et al., 2022). Newer methods add rule-based rewards and constitutional principles so models internalize “don’t make stuff up if you can’t justify it.” arxiv.org

Behavior specs + safety policies.

Vendors publish explicit “model specs” for desired conduct: cite when possible, avoid definitive claims without evidence, ask for clarification, etc. That policy scaffolding is part of how ChatGPT behaves. OpenAI

Independent evaluation.

Benchmarks like HELM stress-test models across accuracy, robustness, and safety so we can compare transparency and failure modes, not just raw “smarts.” arxiv.org

Post-deployment guardrails.

Threat models evolve (prompt injection, jailbreaks). Labs add filtering, secondary “classifiers,” and red-teaming to catch bad outputs before you see them. (Even then, perfection is unrealistic; this is an arms race.) Financial Times
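To make the grounding idea concrete, here is a minimal sketch of the retrieval step. Everything in it is an assumption for illustration: the two-passage corpus, the `Passage` type, the crude word-overlap scoring, and the `min_overlap` threshold are hypothetical stand-ins, not how any particular product does it. It shows two habits from the list above: condition the prompt on vetted passages with numbered citations, and refuse when nothing relevant is found.

```python
import re
from dataclasses import dataclass
from typing import List, Set

REFUSAL = "Insufficient evidence in the vetted corpus; please verify with a primary source."


@dataclass(frozen=True)
class Passage:
    source: str  # hypothetical label for where the text came from
    text: str


# Stand-in for a curated corpus. The entries are illustrative, not real documents.
CORPUS = [
    Passage("attention-notes", "The goldfish attention span comparison has no credible primary source."),
    Passage("rag-notes", "Retrieval-augmented generation conditions answers on retrieved passages."),
]


def tokenize(text: str) -> Set[str]:
    """Lowercased word set; crude, but enough to illustrate retrieval."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, corpus: List[Passage], min_overlap: int = 2) -> List[Passage]:
    """Return passages sharing at least `min_overlap` words with the question, best first."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(p.text)), p) for p in corpus]
    hits = [pair for pair in scored if pair[0] >= min_overlap]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in hits]


def build_grounded_prompt(question: str, corpus: List[Passage]) -> str:
    """Build a prompt that cites retrieved passages, or refuse if none are relevant."""
    passages = retrieve(question, corpus)
    if not passages:
        return REFUSAL
    numbered = "\n".join(
        f"[{i}] {p.source}: {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the numbered passages below and cite them by number.\n"
        "If they do not support an answer, say you do not know.\n\n"
        f"{numbered}\n\nQuestion: {question}"
    )
```

Sending the resulting prompt to a model is left out on purpose, since that call depends on the vendor. Real systems typically use embedding search rather than word overlap, but the shape is the same: retrieve first, then answer only from what was retrieved.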

But let’s be adults: AI still hallucinates

LLMs predict the next token; they don’t possess a built-in truth oracle.

Under pressure (ambiguous prompts, missing context, long chains of facts), models can produce confident nonsense (“hallucinations”).

Surveys and recent reviews catalog where and why this happens, plus mitigation strategies. The industry consensus: you can shrink hallucinations, not get rid of them. arxiv.org

So who makes these rules AI follows, and why should we trust them?

Model makers (labs).

They decide training data, safety tuning, and behavioral guardrails (e.g., “model specs,” RLHF, Constitutional AI). OpenAI publishes a public Model Spec describing desired behaviors and trade-offs; Anthropic’s Constitutional AI papers show how a written “constitution” steers models away from harmful outputs. These are voluntary but visible, and you can read them. OpenAI

Deployers (your company / platform).

The org that uses a model sets product policies, retrieval sources, logging, and refusal thresholds. Good deployers “ground” answers in vetted sources (RAG), require citations, and add their own safety checks. (This is increasingly considered best practice, not just a nice-to-have.) NIST

Regulators & governments.

The EU AI Act is now law with staged obligations (bans since Feb 2, 2025; GPAI rules Aug 2025; most high-risk obligations by 2026-2027). It’s enforceable with big fines, so it meaningfully constrains how models must behave in Europe. U.S. federal policy has been in flux (Biden’s 2023 EO set guardrails; the incoming administration changed course in Jan 2025), but agencies still publish AI governance requirements for their own use. EU Commission

Standards bodies (soft law).

NIST’s AI Risk Management Framework (voluntary, widely referenced) and ISO/IEC 42001 (an auditable AI management system standard) give checklists for risk, transparency, and accountability that companies can be assessed against. The OECD AI Principles guide many national policies. NIST

Civil society & industry consortia.

Groups like Partnership on AI publish practical frameworks (e.g., Responsible Synthetic Media) that many companies pledge to follow, with public case studies. This isn’t law, but it creates reputational and ecosystem pressure. Partnership on AI

Why should we trust any of them?

In short, don’t.

This is what you should be asking:

Transparency: Are behavior rules public (e.g., a model spec)? Are training/usage limits explained? Are citations required for factual claims? OpenAI

Enforceability: Are there legal hooks (EU AI Act) or certifiable standards (ISO/IEC 42001)? Can regulators fine or auditors fail an organization? EU Commission

Evaluation: Are models stress-tested on open benchmarks (e.g., Stanford’s HELM) so outsiders can compare failure modes—not just hype scores? Stanford

Accountability: Is there an incident pathway (bug reports, appeals), usage logging, and the ability to reproduce the answer chain (prompt → sources → output)? NIST’s RMF strongly pushes this discipline. NIST

The checklist for when you actually need the truth

Ask for sources and make the model quote them.

“Cite at least two reputable sources and show the exact lines you used.” RAG + citations beats vibes. (And yes, check the links.)

Constrain the knowledge base.

When stakes are high, ground the model on a curated corpus (docs you trust, primary literature) instead of the open web. This is where RAG shines. arxiv.org

Force an uncertainty call.

Require the model to say “I don’t know / insufficient evidence” and propose how to verify (experts to ask, datasets to query). This behavior is encouraged by alignment methods. arxiv.org

Cross-examination.

Have the model produce two answers from different sources and ask it to reconcile disagreements, noting which claim is less supported. (This mirrors adversarial evaluation.)

Guard against injection.

If you let the model browse, sandbox sources and block instructions from untrusted pages. (Prompt-injection is real.) Financial Times

Log and audit.

Keep a trail: prompt → retrieved passages → final answer. If something’s off, you can trace the failure to retrieval, synthesis, or policy.
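The last two items on that checklist can be sketched together. What follows is a rough illustration, not a hardened defense: the `SUSPICIOUS` pattern list, the `AuditRecord` type, and `screen_passages` are all names I made up for this example, and a short regex will miss plenty of real injection attempts. The point is the shape: set aside retrieved text that looks like it is giving orders, and keep one record of prompt, passages, and answer so a bad output can be traced.

```python
import json
import re
from dataclasses import asdict, dataclass, field
from typing import List

# Hypothetical phrases that tend to show up in prompt-injection attempts.
# A pattern list like this is a heuristic, not a complete defense.
SUSPICIOUS = re.compile(
    r"ignore (all |any )?(previous|prior) instructions|disregard the system prompt",
    re.IGNORECASE,
)


@dataclass
class AuditRecord:
    """One traceable step: prompt -> retrieved passages -> final answer."""
    prompt: str
    passages: List[str] = field(default_factory=list)     # text passed to the model
    quarantined: List[str] = field(default_factory=list)  # text held back as suspicious
    answer: str = ""

    def to_json_line(self) -> str:
        """Serialize as a single JSON line, suitable for appending to a log file."""
        return json.dumps(asdict(self), ensure_ascii=False)


def screen_passages(prompt: str, retrieved: List[str]) -> AuditRecord:
    """Split retrieved text into usable and quarantined passages, and start the audit record."""
    record = AuditRecord(prompt=prompt)
    for text in retrieved:
        if SUSPICIOUS.search(text):
            record.quarantined.append(text)
        else:
            record.passages.append(text)
    return record
```

Once the model has answered, set `record.answer` and append `record.to_json_line()` to a log. If an answer turns out to be wrong, the record shows whether the fault sat in retrieval (bad or missing passages), in synthesis (good passages, bad answer), or in policy (something that should have been quarantined was not).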

Why you shouldn’t trust any new model

Brand-new facts / breaking news (no time for the good sources to percolate).

Long, citation-heavy claims without inline evidence.

Topics flooded with SEO spam or advocacy copy (nutrition fads, miracle cures).

Questions that rely on legal/medical nuance—use AI for structure, not the final word.

The media lies (or at best over-simplifies) because attention economics reward it.

AI doesn’t “have to” lie because we can architect for truth-seeking: constrain sources, incentivize refusals, and evaluate relentlessly.

But that takes responsibility and conscious effort.

Even so, it still fails. But unlike the media headlines that linger, we can take those failures and learn from them.

When in doubt, don’t trust, and always verify.

After all, I’m just some guy on the internet.